Here’s a little project from 1 in 1 for ITP30.

I want people to be able to drop their avatars into an open space and drag them as far from or as close to others as they want. Or something like that. Here’s something: drop your avatar in and get close to Red.

Che-Wei Wang (last update 2013) Visit cwandt.com for the latest.

We rely heavily on our vision to identify change. We see sand accumulating at the bottom of the hourglass. We see the minute hand rotate clockwise. How would our sense of time change if we cast time to another sense?

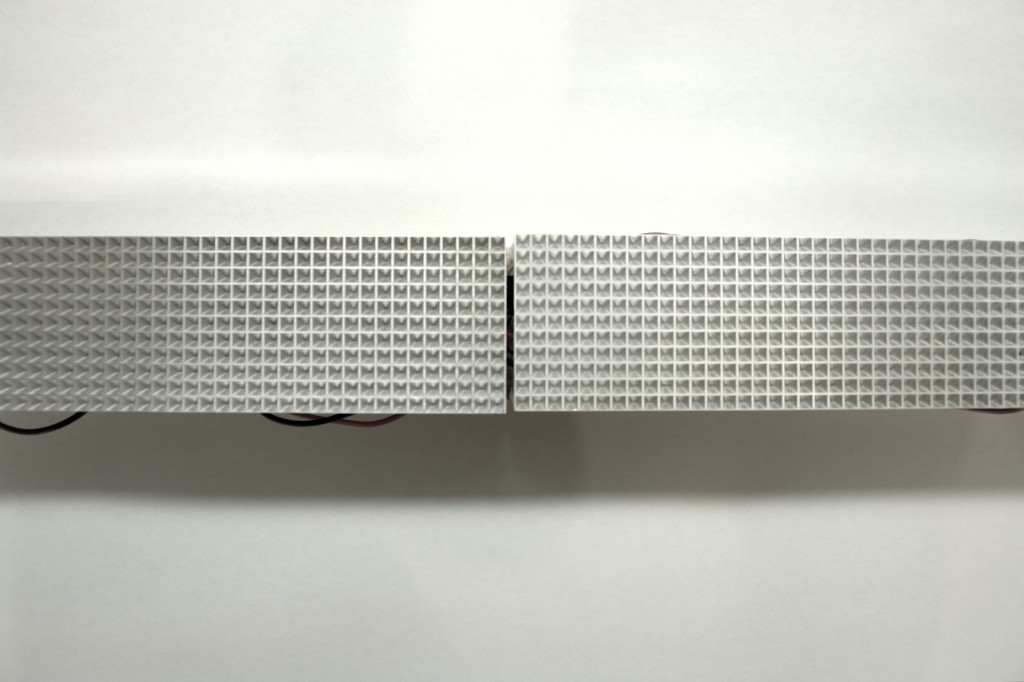

Thermal Clock is a timepiece that positions heat along a bar over a 24 hour cycle to tell time.

Using an array of peltier junctions, heat is emitted from a focused area moving from left to right along the bar over the course of a day.
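A rough sketch of how the time of day could be mapped onto the bar. The number of junctions (`num_junctions`) and the linear blend between neighbouring junctions are assumptions for illustration; the post does not describe the actual hardware layout or control code.

```python
def day_fraction(hour, minute=0, second=0):
    """Fraction of the 24 hour cycle that has elapsed (0.0 at midnight)."""
    return (hour * 3600 + minute * 60 + second) / 86400.0


def junction_levels(fraction, num_junctions=12):
    """Drive level (0..1) for each junction, left to right.

    The hot spot sits at `fraction` of the way along the bar and is
    shared linearly between the two nearest junctions (an assumption).
    """
    pos = fraction * (num_junctions - 1)
    left = int(pos)
    blend = pos - left
    levels = [0.0] * num_junctions
    levels[left] = 1.0 - blend
    if left + 1 < num_junctions:
        levels[left + 1] = blend
    return levels


if __name__ == "__main__":
    # At noon the heat sits halfway along the bar.
    print(junction_levels(day_fraction(12), num_junctions=5))
```

Blending between two junctions keeps the hot spot moving smoothly instead of jumping from one junction to the next.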

Thermal Clock from che-wei wang on Vimeo.

Time is our measure of a constant beat. We use seconds, minutes, hours, days, weeks, months, years, decades, centuries, etc. But what if we measured time against rituals, chores, tasks, stories, and narratives? How can we use our memory, prediction, familiar and unfamiliar narratives to tell time?

As a child, I used the length of songs to measure how much time was left during a trip. A song was a convenient unit to multiply into any larger measure, like the time left until we arrived at our grandmother’s place. The length of a song was also a measure I could digest and understand in an instant.

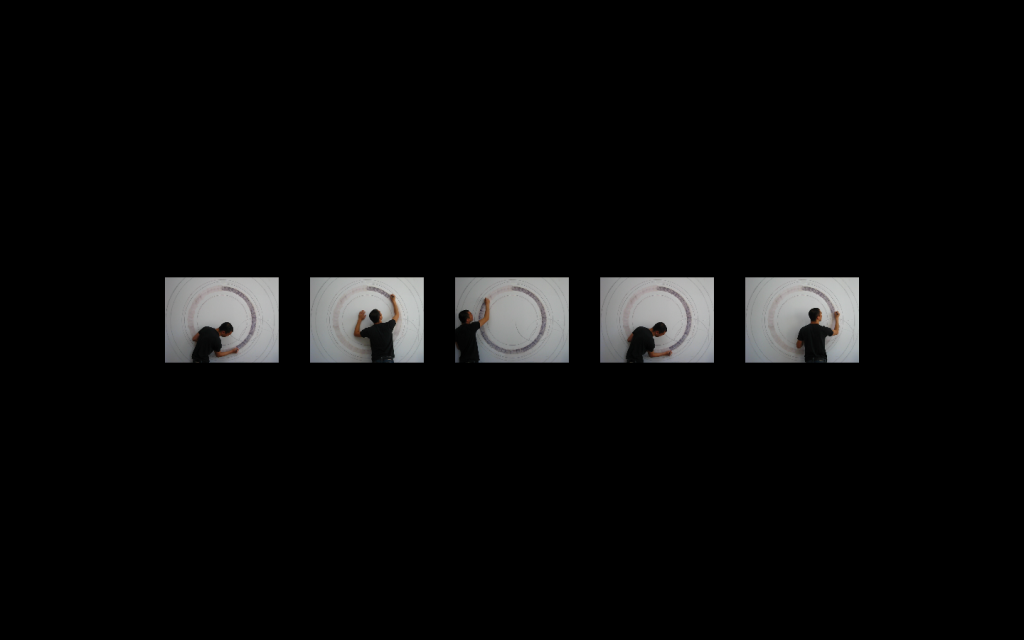

The first iteration of Cinematic Timepiece consists of 5 video loops playing at 5 different speeds on a single screen. The video is of a person coloring in a large circle on a wall.

The frame furthest to the right is a video loop that completes a cycle in one minute. The video to the left of the minute loop completes its cycle in one hour. The next completes in a day, then a month, then a year.
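One way to drive the five loops is to compute how far the current moment is through each cycle and pick the matching video frame. This is only a sketch in Python, not the OpenFrameworks code; the names `loop_phases` and `frame_for` are made up for illustration.

```python
import calendar
from datetime import datetime


def loop_phases(now):
    """Progress (0..1) through each cycle, ordered left to right on screen."""
    seconds = now.second + now.microsecond / 1e6
    minute = seconds / 60
    hour = (now.minute + minute) / 60
    day = (now.hour + hour) / 24
    days_in_month = calendar.monthrange(now.year, now.month)[1]
    month = (now.day - 1 + day) / days_in_month
    days_in_year = 366 if calendar.isleap(now.year) else 365
    year = (now.timetuple().tm_yday - 1 + day) / days_in_year
    return {"year": year, "month": month, "day": day,
            "hour": hour, "minute": minute}


def frame_for(phase, frame_count):
    """Index of the video frame to show for a given phase."""
    return int(phase * frame_count) % frame_count


if __name__ == "__main__":
    for name, phase in loop_phases(datetime.now()).items():
        print(f"{name:>6}: {phase:.4f}")
```

Because every loop shows the same footage, only the phase differs between frames: the minute loop sweeps through the whole clip sixty times while the hour loop plays it once.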

Through various iterations, we intend to experiment with various narratives and rituals captured in a video loop to be read as measures of time.

The software was written in OpenFrameworks for a single screen to be expanded in the future for multiple screens as a piece of hardware.

Cinematic Timepiece is being developed in collaboration with Taylor Levy.

Download the fullscreen app version [http://drop.io/cinematicTimepiece#]

Time in Six Parts is a series of attempts to unravel and re-present time through alternative perspectives. The hope is to demystify scales of time that are out of our immediate reach and explore new approaches to marking time.

The 21st Century Confession Booth is a service for anyone to anonymously unload the burden of a sin or secret. Confessions can be dropped off by entering them on the web at confessionbot.com , by calling 718-406-0483, or by finding one of our public confession microphones. Messages are anonymously transcribed and posted to twitter.com/confessionbot.

Behind the scenes, the phone system is using quicktate.com’s api to transcribe speech to text and a custom php script to deliver the confession to twitter.

Who do you have a crush on?

Did you lie today?

Who do you secretly hate?

Who do you secretly love?

Who do you envy?

Have you cheated?

How much was your last tip?

What’s the dirtiest thing you’ve ever done?

Do you secretly like KFC?

How about that hair on your bf’s back?

Do you pick your nose with your gf’s tweezers?

What sites do you visit when you are home alone?

Did you really miss the train? really?

Do you like tomato based sauces?

Do you cheat on crosswords?

Did you eat junk food every day?

Did you finish your roommate’s milk again?

Are you really taking the pill?

Do you like her apartment?

Did you change your underwear today?

What teacher would you have an affair with?

Leave a confession and get on with your day.

icantstopthinkingofyou.com redirecting to icantstopthinkingofyoutoo.com redirecting to

icantstopthinkingofyou.com redirecting to icantstopthinkingofyoutoo.com

with Celina Alvarado

plife at IAC from che-wei wang on Vimeo.

P.Life is a large scale interactive screen designed for the IAC’s 120′ wide video wall. In the world of P.Life, Ps run across the 120′ screen frantically moving material from one house to another. Along the way, Ps exchange pleasantries (based on text message inputs) as they pass by each other, offering a helping hand to those in need. The landscape shifts and jolts based on audio input from the audience, tossing Ps into the air. Playful jumps into midair often end in injury, forcing them to crawl until a fellow P comes by to help out.

Features

Text messaging to create new characters of different sizes and dialogues.

Audio input to influence landscape

Performance backend to influence landscape

Ps move with life-like motion as they walk, jump, fall, run, skip, crawl, carry boxes, push boxes, etc.

P.Life is written in OpenFrameworks and uses the Most Pixels Ever library

By Che-Wei Wang and Jiaxin Feng

Live Music by Taylor Levy

photos by wuyingxian

Elevator P interprets random conversations in an elevator as poetry and publishes them immediately on Twitter. Using a hidden microphone, Elevator P captures unexpected chatter, un-staged and raw. The interpreter elevates mundane elevator conversations into beautiful flowing poetry capturing the deep essence of each dialogue.

Haiku Poetry.

http://twitter.com/chatterbot

Turf Bombing is a location-based war game which rewards and encourages traveling to and learning about different neighborhoods.

TheNewVote.com is a location-based SMS polling system and place to post photographic proof of election day votes.

Text Sandbox is a set of inline editable text boxes for your editing pleasure. Enjoy.

Pixel Sandbox is a live pixel whiteboard. Click on a pixel to change its color and draw something. Together.

Tilting my laptop changes the background color of a div element on a webpage. [Live Tilt]

An applet reads tilt sensor values from my laptop, posts them to sensorbase.org, then a webpage running ajax reads the sensor values and changes a background color. LIVE.

It’s super slow right now because I can’t figure out how to get sensorbase to send me only the latest value in the dataset, so I have to poll through a ton of values until I reach the end of the set.

P.Life is a large scale interactive screen designed for the IAC’s 120′ wide video wall. In the world of P.Life, Ps run around growing, living, and dying, as the landscape continuously changes creating unexpected situations challenging their existence.

Scenario

Screen fades from black to dawn and rising sun along a horizon. The bottom third of the screen shows a section through the landscape cutting through underground pipes, tunnels, reservoirs, etc. Towards the top the surface of the landscape is visible as it fades and blurs into the horizon and sky.

A few Ps wander around the flat landscape. A number appears on screen for participants to send an SMS message to with their name. As participants send SMS messages, more groups of Ps appear on screen, one group per SMS, and wander across the landscape. The landscape begins to undulate as the audience interacts with the screen, creating hills, valleys, lakes, and cliffs. Ps running across the landscape fall to their death as the ground beneath their feet drops, or ride down the side of a hill as it moves across the screen like a wave. Ps that fall to their death slowly sink into the ground and become fertilizer for plant-life, which is then eaten by other families of Ps, allowing them to multiply.

Features

SMS listener to make new families of Ps

An array of IP cameras to transmit video for screen interaction

Background subtraction to capture the audience’s gestures

or Open CV with blob detection or face detection to capture the audience’s gestures

or IR sensors to capture the audience’s gestures

or Lasers and photo-resistors to capture the audience’s gestures

Multi-channel audio triggers for events in P-Life based on location

Background elements and landscape speed through sunrise to sunset in a 3 minute sequence

Ps with life-like motion as they walk, jump, fall, grow, climb, swim, drown, die, stumble, flip, run, etc.

pixelated stick figures? large head?

Simple 8bit game-like soundtrack

Various plant-life grown from dead Ps

Precedents

Lemmings, N for Ninja, Funky Forest, Big Shadow, eBoy, Habbo

Technical Requirements

IP camera array

Multi-channel audio output

Our instructable on Chaam is up! Play!

Charles Amis is the inventor of Chaam.

Chaam from che-wei wang on Vimeo.

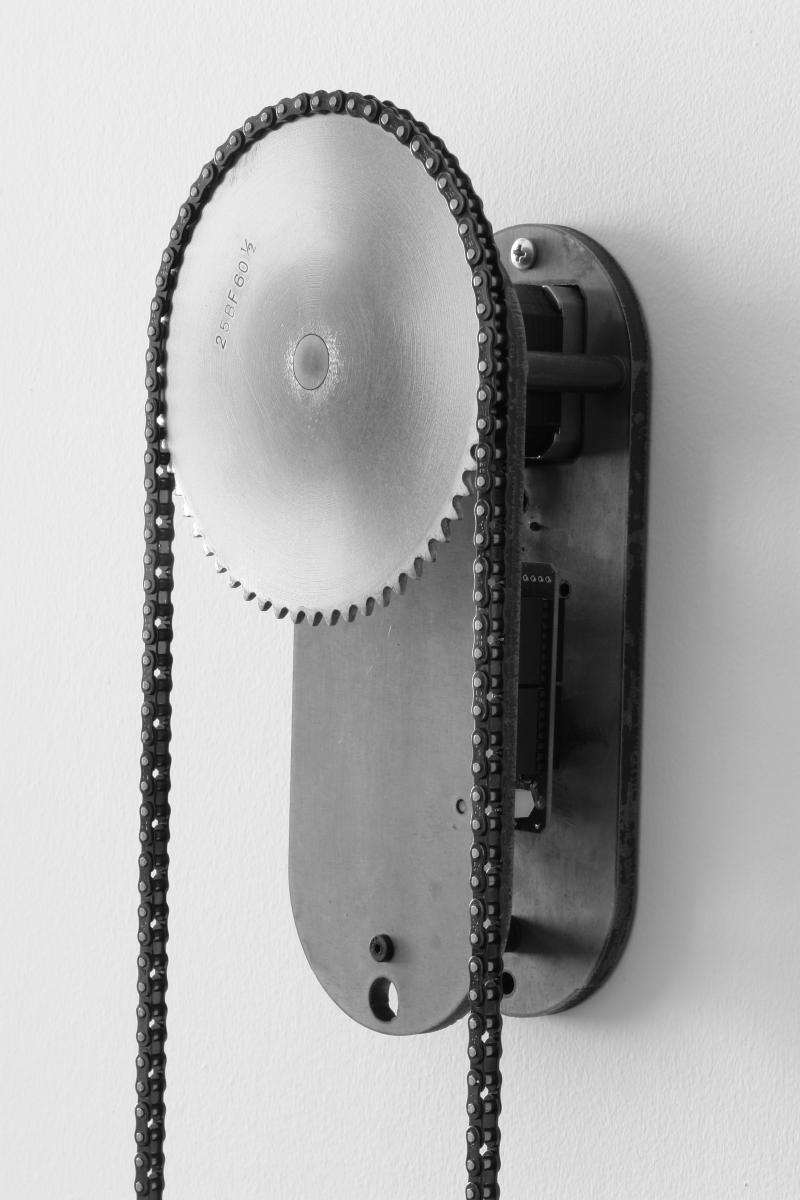

Tetherlight is a hand held light that perpetually points at its sibling. Two Tetherlights constantly point at each other, guiding one Tetherlight to the other with a beam of light.

Tetherlight: Prototype 02 Rotation from che-wei wang on Vimeo.

The devices are each equipped with a GPS module, a digital compass, and a wireless communication module to locate, orient, and communicate its position to the other. They each calculate the proper alignment of a robotic neck to point a light in the other’s direction. In order to maintain the light’s orientation, an accelerometer compensates for the device’s tilt.
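The pointing calculation can be sketched roughly as follows. This is not the Arduino code linked below, just an illustration in Python: it assumes a spherical Earth, takes the compass heading in degrees clockwise from north, and leaves out the accelerometer tilt compensation. The function names are hypothetical.

```python
import math


def initial_bearing_deg(lat1, lon1, lat2, lon2):
    """Great-circle bearing from point 1 to point 2, degrees clockwise from north."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlam = math.radians(lon2 - lon1)
    y = math.sin(dlam) * math.cos(phi2)
    x = (math.cos(phi1) * math.sin(phi2)
         - math.sin(phi1) * math.cos(phi2) * math.cos(dlam))
    return math.degrees(math.atan2(y, x)) % 360


def neck_pan_deg(bearing, heading):
    """Signed angle (-180..180) the neck must turn relative to the device's heading."""
    return (bearing - heading + 180) % 360 - 180


if __name__ == "__main__":
    # Made-up positions: this device and its sibling a short walk away.
    bearing = initial_bearing_deg(40.7295, -73.9965, 40.7359, -73.9911)
    print(neck_pan_deg(bearing, heading=90.0))
```

Subtracting the compass heading is what keeps the light on target when the device itself is turned in the hand.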

Tetherlights are for loving spouses, cheating spouses, girlfriends, boyfriends, children, pets, bags of money, packages, and pretty much anything that you would want to locate at a glance. An ideal use of Tetherlights would be in a situation where two people or two groups of people need to wander, but also need to locate one another in an instant. In a hiking scenario, a large group might split up to accommodate different paces. With Tetherlights, one’s whereabouts in relation to the other group is represented spatially with a bright light in the appropriate direction.

Tetherlight attempts to make one’s relation to a distant person more immediate by making a physical pointer instead of an abstract map. With traditional maps, people need to communicate their positions, orient their maps, locate a point on the map, then look up in that direction. Tetherlight does it in an instant. The difference is like looking at a map to see where your uncle lives versus having a string that’s always attached to him.

If you’re interested, here’s the Arduino Code: Tetherlight06xbeeGPS.pde

FeedBack PlayBack is an interactive, dynamic film re-editing/viewing system that explores the link between media consumption and physiological arousal.

This project uses galvanic skin response and pulse rate to create a dynamic film re-editing and viewing system. The user’s physical state determines the rhythm and length of the cuts and the visceral quality of the scenes displayed; the user’s immediate reactions to the scenes delivered feed back to generate a cinematic crescendo or a lull. This project exploits the power of media to manipulate and alter our state of being at the most basic, primal level, and attempts to synchronize the media and viewer, whether towards a static loop or an explosive climax.

In a darkened, enclosed space, the user approaches a screen and rests his or her fingertips on a pad to the right of the screen. The system establishes a baseline for this user’s physiological response and re-calibrates. Short, non-sequential clips of a familiar, emotionally charged film (for example, Stanley Kubrick’s 1980 horror masterpiece “The Shining”) are shown. If the user responds to slight shifts in the emotional tone of the media, the system amplifies that response and displays clips that are more violent and arousing, or calmer and more neutral. The film is re-edited, the narrative reformulated according to this user’s response to it.

FeedBack PlayBack is by Zannah Marsh and Che-Wei Wang

Galvanic skin response readings are simply the measurement of electrical resistance through the body. Two leads are attached to two fingertips. One lead sends current while the other measures the difference. This setup measures GSR every 50 milliseconds. Each reading is graphed, while peaks are highlighted and an average is calculated to smooth out the values. A baseline reading is taken for 10 seconds if the readings go flat (fingers removed from leads).
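The smoothing and peak highlighting described above might look something like this. It is a minimal sketch, not the project’s code: the window size, the simple local-maximum test for peaks, and the way the baseline is averaged are all assumptions.

```python
from collections import deque

SAMPLE_INTERVAL_MS = 50                            # one GSR reading every 50 ms
BASELINE_SAMPLES = 10_000 // SAMPLE_INTERVAL_MS    # 10 seconds of readings


def smooth(readings, window=5):
    """Running average over the last `window` readings (window size is assumed)."""
    recent = deque(maxlen=window)
    smoothed = []
    for value in readings:
        recent.append(value)
        smoothed.append(sum(recent) / len(recent))
    return smoothed


def find_peaks(values):
    """Indices where a value rises above its predecessor and does not rise further."""
    return [i for i in range(1, len(values) - 1)
            if values[i - 1] < values[i] >= values[i + 1]]


def baseline(readings):
    """Average of the first 10 seconds of readings."""
    window = readings[:BASELINE_SAMPLES]
    return sum(window) / len(window)


if __name__ == "__main__":
    raw = [512, 515, 530, 560, 540, 520, 518, 517]   # made-up sensor values
    print(smooth(raw, window=3))
    print(find_peaks(raw))
```

Peaks are found on the raw readings here; running `find_peaks` on the smoothed values instead would trade responsiveness for fewer false triggers.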

The ankle support is a low profile sleeve around the foot and a strap at the heel to connect to the air muscle. A shorter 12″ muscle connects the ankle support to the calf. The calf attachment is now constructed out of a single piece of leather with nylon reinforcement at the top to prevent buckling and stretching when the air muscle is actuated. It’s ideal to have the solenoid valve as close to the air muscle as possible, so I’m going to have to make room for that somewhere on the calf.

After the first round of testing, I realized assisting jumping with pneumatics is nearly impossible. The size of the tubes and tank necessary to get a high enough cfm to actuate the air muscles at the speed of jumping seems too large for a lightweight wearable application. So, I’m going to concentrate on assisting walking. Maybe speed walking.

Soft Exoskeleton V01 Test from che-wei wang on Vimeo.

I finally have a full assembly of all the major components sewn with thick canvas and leather. The small scuba tank provides the air pressure, controlled by an Arduino and solenoid valves. The basic operation of pneumatic muscle works. Air pressure at about 100psi inflates the muscle, creating a contracting motion of about 2 inches, enough to make my leg kick out (although the speed of inflation needs to be faster).

The harness for the scuba tank and a guide for the pneumatic muscle are still issues to be dealt with. The strap for the thigh needs to extend further down to help guide the placement of the pneumatic muscle and the scuba tank needs to be attached in a more comfortable way.

I finally got the solenoid valve hooked up to an air compressor and an Arduino board. Air pressure is regulated at 100 psi. It looks like I’m going to need bigger pipes to get more cfm for faster muscle actuation. The shorter muscle has an oversized braided sleeve, which I thought might help the actuation distance, but it seems like it just takes more air to fill up and doesn’t make a noticeable difference.

Pneumatic Muscle: Solenoid Valve Test 02 from che-wei wang on Vimeo.

((image manipulated from original by manitou2121, an interesting composite of faces from hotornot.com))

Why should I trust you? Because you sound trustworthy? Smell trustworthy? Look trustworthy? How do we measure trust?

Trust Vision is a personally biased trust measuring video camera. As each frame is presented, a face or faces within the frame are superimposed with trust ratings based on previous faces that have been rated by the user. Over time, the computer creates a mental map of facial features of a trustworthy individual. The real-time analysis of facial features is aided by the user’s bias, as he or she discretely changes the trust ratings of individuals on the screen. Changes in the mental map may “out” a previously trusted person or improve someone’s standing.

((image: pmorgan ))

Windows give a frame of reference, crop a view, magnify a perspective. They filter and block. A window is the opening that mediates here from there. I would argue that windows are what give walls meaning. Without windows, there wouldn’t be a notion of “there” since the possibility of something else on the other side wouldn’t exist without the opening. Windows provide a glimpse, a frame, a blur, a dimension of something more, a thought into a different time and space. A prisoner in solitary confinement opens a window in his mind to keep his sanity, while the Queen of England peeks out her picture frame window, checking the weather outside.

The biggest dilemma of the window is the opening itself. We’d like a filter. We want to see what’s there, but we don’t want to hear it, or feel it. Perhaps we have a level of control with the windows in our minds, excluding dreams, nightmares, and synesthesia (leaky mind windows), but physical windows are bound to the performance of material, a challenge since the beginning of windows.

Glass attempts to provide clear views, filter some light, block temperature, and baffle sound, all in the span of a 1/4″ section. We’ve come a long way since the small openings in 15th century castles, yet we still haven’t seen the holy grail of windows. Windows should be thin, structural, fully insulated, sound proof, malleable sheet material that can filter varying amounts of incoming light and views while remaining completely outwardly transparent. Oh, and it should be cheap, lightweight and sustainable. We have low thermal conducting material (Aerogel), fiber optic skylights (Parans Solar Lighting), lightweight skins (ETFE / Water Cube Skin), self-cleaning glass (Pilkington Activ), LCD privacy glass (Privalite), time-space warping windows (Khronos Projector), illuminating glass (Lumaglass), non-reflective glass (Luxar), breathing skins (Living Glass), living organic envelopes (Breeding Spaces), interactive storefronts (Displax), thin-shell frameless glass, the list goes on. So would a combination of these give us the ultimate window?

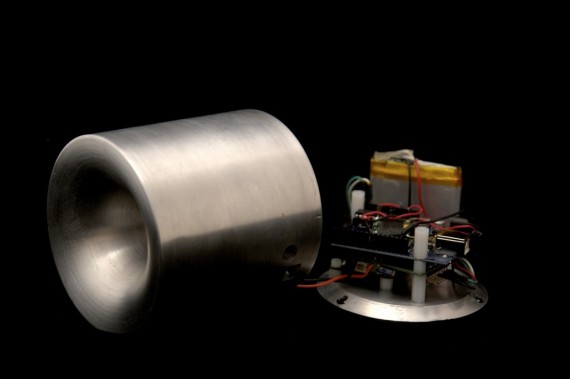

Here’s a sketch of how the parts might fit to get the laser to freely point in any direction. Four servo motors work in tandem to tilt the laser head. The batteries and circuitry are stored in the bottom half of the cylinder. I was going to have a mechanical gyro to have it orient itself to the ground, but the form seemed too restricted, so I’m opting for a gyro sensor to deal with orienting the device to gravity.

Video chat is great, but maybe I don’t want to see your face. Or more likely, I don’t want you to see me sitting at my computer in my underwear. A less intrusive channel of communication, like IM, is often desirable, but we lose several modes of expression when we only communicate with text. Could a greater sense of presence be transmitted through our most common computer interfaces (keyboard, mouse)? Many of us chat with IM, but CAPS and emoticons lack the fidelity to properly transmit a range of emotions. Without any training or instruction, we already direct a large set of emotions at our devices. We often express our feelings to the devices closest to us. We slap our TV, pet our computer, and slam our mouse with frustration, yet these common expressions are ignored. What if we could open a channel of communication for your mouse? What if your mouse gestures could transmit your feelings across to your friend?

TeleCursing is a chat plugin that takes your mouse cursor and simply places it on your friend’s screen. A thin line is drawn on the screen connecting the mouse cursors within proximity. With the cursors on the screen, you can flirt, scribble with frustration, hold hands, play tag, or just know when your friend is active on the other end. The cursor could be customized to appear as a less distracting translucent cursor or as a livelier avatar with animations based on mouse vector, proximity and clicks.

Other multimouse hacks: DualOsx v.1(hoax?), MPX: The Multi-Pointer X Server, SpookyAction [video]

The first set of homebrew pneumatic muscles actually works. There’s no leakage and it looks like it holds well over 60 psi. The tiny air reservoir barely holds enough air for 2 actuations, so it looks like a small scuba tank or a tiny air compressor is going to be needed. Weight tests coming soon.

Pneumatic Muscle : Pressure Test from che-wei wang on Vimeo.

Here’s my first set of homebrew pneumatic muscles. I’m not sure how strong these are going to be, but it looks promising. The compression fittings aren’t being used the way they were meant to be used, so it’s likely that connection will be the first point of failure when it goes under stress.

As soon as the assembly is tested, I’ll be posting instructions on how to make your own.

The Laser Tether is a hand held laser pointer that perpetually points at its sibling. Two Laser Tethers constantly point at each other no matter where they are on the globe. If my cousin in Beijing had a Laser Tether and I turned on mine in New York City, our lasers would point towards our feet as the lasers draw a straight line through Earth between my cousin and myself.

The devices are each equipped with a GPS module, a digital compass, and a cell network module to locate, orient, and upload its location to an online database. They then calculate the proper alignment of their lasers in relation to the other. In order to maintain an orientation that is always perpendicular to the ground, the lasers are mounted on a self leveling mechanism similar to existing laser levels like the DEWALT DW077KI.
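How far below the horizon the laser has to dip follows from simple geometry: a straight chord between two points on a sphere leaves the surface at half the central angle between them. The sketch below assumes a perfectly spherical Earth and ignores altitude; it is an illustration, not the device’s firmware, and the function names are hypothetical.

```python
import math


def central_angle_deg(lat1, lon1, lat2, lon2):
    """Angle at the Earth's centre between two points (haversine formula)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2)
    a = min(1.0, max(0.0, a))   # guard against floating point drift
    return math.degrees(2 * math.atan2(math.sqrt(a), math.sqrt(1 - a)))


def depression_angle_deg(lat1, lon1, lat2, lon2):
    """Degrees below the local horizon for a straight line through the Earth."""
    return central_angle_deg(lat1, lon1, lat2, lon2) / 2


if __name__ == "__main__":
    # Approximate coordinates for New York City and Beijing.
    print(depression_angle_deg(40.71, -74.01, 39.90, 116.41))
```

Two devices on exactly opposite sides of the globe would point straight down (90 degrees); anything closer points at a shallower angle into the ground.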

Possible uses for the devices are for loving spouses, cheating spouses, girlfriends, boyfriends, children, pets, bags of money, packages, and pretty much anything that you would want to locate at a glance. Although potential uses are many, the scenarios I have in mind are for hiking, sailing, driving, caravaning, patrolling, playing tag, jogging, getting lost in crowds, hunting, tracking, spying, and battle strategy coordination. An ideal usage of the Laser Tether would be in a situation where two people need to wander, but also need to locate one another in an instant.

With GPS units becoming more pervasive, many GPS tracking applications have databases of people’s known locations. The emerging debates on privacy and accuracy are a major concern ((Katina Michael, Andrew McNamee, MG Michael, “The Emerging Ethics of Humancentric GPS Tracking and Monitoring,” icmb, p. 34, International Conference on Mobile Business (ICMB’06), 2006)), while the abstract representation of GPS data in maps fails to convey a strong sense of immediacy and relevance. Applications like Navizon and Mologogo are open to large online communities and are visualized on maps. Common commercial applications of GPS tracking devices include fleet control of taxis and trucks, animal control, race tracking, and visualization. ((GPS tracking. (2008, January 27). In Wikipedia, The Free Encyclopedia. Retrieved 04:39, January 30, 2008, from http://en.wikipedia.org/w/index.php?title=GPS_tracking&oldid=187351992))

The Laser Tether attempts to make one’s relation to a distant person more immediate. The difference is like looking at a map to see where your uncle lives versus having a string attached to him.

Pneumatic systems are clean, safe, lightweight, and reliable. In a pneumatic electronic hybrid system, electric components simply control the flow of air pressure, removing the burden of weight and kinetic actuation from electrical power to pneumatic power. The advantage is a lightweight low idle power system with high power kinetic impact.

My initial encounter with pneumatic systems like inflatable shelters and floatation devices exhibited an enormous potential for creating lightweight systems that can be reconfigurable and transportable. Pneumatic systems are used in various critical applications that require immediate response, reliability and flexibility. Air bag systems, inflatable life vests, and self-inflating rafts are of particular interest to me.

In terms of pneumatic systems as a wearable technology, a few possibilities come to mind (nomadic shelters, impact protection, an expressive suit, and a powered exoskeleton, just to name a few). Each has potential uses that interest me and many have been explored by others. Motoair sells pneumatically actuated vests and jackets that cushion accidents in motorcycling and other high impact sports. ((Motoair, MOTOAIR, Jan. 28, 2008, <http://www.motoair.com/>)) Powered exoskeletons, currently developed within research groups around the world, are focused on assisting human locomotion through a wearable machine. ((exoskeletons: http://bleex.me.berkeley.edu/index.htm, http://www.newscientist.com/article.ns?id=dn1072, http://spectrum.ieee.org/print/1974, http://www.youtube.com/watch?v=0hkCcoenLW4)) Actuated parts of the machine coincide with the body and gross muscle groups to help lift heavy loads. A suit for the upper extremities has been created by Hiroshi Kobayashi, a roboticist from the Science University of Tokyo. ((BBC. BBC News: Health, Jan. 28, 2008 <http://news.bbc.co.uk/1/hi/health/2002225.stm>)) Dr. Daniel Ferris and Dr. Riann Palmieri-Smith lead a group of researchers at the University of Michigan in creating pneumatically powered exoskeletons for the lower limbs. ((Human Neuromechanics Laboratory, Dr. Daniel Ferris and Dr. Riann Palmieri-Smith. Jan. 28, 2008.))

Features of the wearable system

The system will be powered by a pneumatic reservoir and triggered by the user’s motions or an external switch to activate the system only at desired moments. An electronic circuit, powered by a battery, will sense and trigger movement through a series of artificial muscles. Pneumatic muscles work by inflating a silicone tube within a plastic braided sleeve. The inflation of the tube shortens the overall length of the assembly as the braided sleeve expands radially. ((Lightner, Stan, et al. The International Journal of Modern Engineering. Volume 2, Number 2, Spring 2002, Jan. 28, 2008 <http://www.ijme.us/issues/spring%202002/articles/fluid%20muscle.dco.htm>))

Pneumatic muscles are a relatively recent development in air powered actuation, led by the Shadow Robot Company and FESTO Corporation. They were originally commercialized by The Bridgestone Rubber Company in the 1980s. ((Lightner, Stan, et al. The International Journal of Modern Engineering. Volume 2, Number 2, Spring 2002, Jan. 28, 2008 <http://www.ijme.us/issues/spring%202002/articles/fluid%20muscle.dco.htm>))

Air Muscle videos:

The Pneumatic Soft Exoskeleton is worn by strapping components of the system onto parts of the leg to align the assistive muscles with major muscle groups in the leg. By attaching a few pneumatic muscles to assist gross movement of the lower extremities, properly timed actuation of the assisting muscles can add to the user’s own movements to achieve greater results in speed and power. Each assistive muscle would coincide with existing muscle groups and transfer power to tension lines that wrap around the leg in such a way that the forces transfer in a similar fashion to the muscle-tendon-bone hierarchy. The quadriceps femoris muscle group will be the primary group of focus. As the system is perfected, other muscle groups will be identified and addressed.

Components of the system

The system consists of pneumatic muscles of varying sizes which attach to the main wearable frame. The wearable frame is a large fabric that is wrapped tightly around the thigh and crus. The frame consists of straps that are sewn in a pattern to distribute forces from muscle-to-tendon junctures to the rest of the frame. The straps are collected at several junctures to accept a tension connection from a pneumatic muscle.

Air flow of each pneumatic muscle is controlled by a single tube from a 3-way solenoid valve which controls the air flow in and out of the pneumatic muscle from a portable air reservoir worn at the hip of the user. Each solenoid is controlled by outputs from a battery powered Arduino board. Switches from the user’s inputs are fed to the Arduino board to control the actuation of the artificial muscles.

Uses

Potential uses for the Pneumatic Soft Exoskeleton follow many of the current applications for powered exoskeletons. These wearable machines can assist lifting and locomotion. The added benefit of the Pneumatic Soft Exoskeleton is its low weight and flexibility. By making the system lightweight and soft, its appearance is less obtrusive and it is less of a burden to fit to the body. The system is potentially more affordable and highly customizable because of the simplicity of the components.

Technical concerns

The Pneumatic Soft Exoskeleton must be lightweight and perform reliably to assist locomotion. Since the system relies on an air reservoir, it is likely that a user may find the system insufficient in its capacity to perform continuously. This concern can be addressed with a larger reservoir, but that would add more undesirable weight and volume.

There may be a potentially harmful side-effect to the body due to repeated unfamiliar stress on bones and muscles. The softness of the system is intended to dampen any impact that may be harmful, but repeated stress points due to the power of the assistive muscle or the location and transfer of forces to the limbs may be damaging.

Precedents

Here’s a nice intro to the subject from engineeringtv.com

Human Neuromechanics Laboratory at The University of Michigan

HAL at the University of Tsukuba

Muscle Suits at Koba Lab

Wearable Power Assist Suit at Kanagawa Institute of Technology, Robotics and Mechatronics

Talking Face to Face is a networked communication system that detects the position of two users in relation to each other anywhere on the globe. Using a combination of GPS and a compass, the audio output is modified to create the sensation of the voice’s position and proximity to the listener. If Bob is in NYC and Sarah is in LA, their voices would sound relatively distant to each other. Bob would face west and Sarah would face east so their voices would sound as if they were facing each other. If Bob turns away, Sarah’s voice would sound like they were no longer facing each other. As Bob and Sarah turn, the voice they hear will gain reverb, volume, and other effects to simulate their relative presence in a spatial environment.
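As a rough illustration of how orientation could shape the audio, the sketch below turns each person’s compass heading and the bearing to the other person into a stereo pan and a “facing” factor. The constant-power pan law and the cosine-based facing factor are assumptions chosen for the example; the post does not specify the actual audio processing.

```python
import math


def relative_angle(bearing_to_other, heading):
    """Where the other person is relative to where I face, in degrees (-180..180)."""
    return (bearing_to_other - heading + 180) % 360 - 180


def stereo_gains(rel_angle_deg):
    """(left, right) gains using a constant-power pan; +/-90 degrees is fully one side."""
    clamped = max(-90.0, min(90.0, rel_angle_deg))
    theta = (clamped + 90.0) / 180.0 * (math.pi / 2)
    return math.cos(theta), math.sin(theta)


def facing_factor(rel_a, rel_b):
    """1.0 when both people face each other, falling to 0.0 when either faces away."""
    a = (math.cos(math.radians(rel_a)) + 1) / 2
    b = (math.cos(math.radians(rel_b)) + 1) / 2
    return a * b


if __name__ == "__main__":
    # Bob faces due west while Sarah lies roughly west of him; Sarah faces east.
    bob = relative_angle(bearing_to_other=274, heading=270)
    sarah = relative_angle(bearing_to_other=66, heading=90)
    print(stereo_gains(bob), facing_factor(bob, sarah))
```

The facing factor could then scale volume or reverb, so that turning away from the other person makes their voice recede.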

We are curious beings that need and want confirmation of our existence and presence. We like to explore, yet we want to stay at home. We yearn for freedom, difference and change, yet we find comfort in familiarity. I believe it’s this dilemma that telepresence aims to solve.

The presence stack:

Physical sensory input of a moment,

channeled through nerve paths to the brain,

processed and compared to a set of memories of the sensation,

judgments constructed on the presence of the sensation or the realness of the input,

and predictions constructed of the next sensation.

Is what I see, smell, feel, hear, or taste real? Is it out of the ordinary? What is real and not real? Is this moment not the moment I expected to follow the last moment?

Presence deals with and persists in change. Our presence cannot be static. Presence cannot remain in the past or exist in the future. We can only continuously observe and predict changes within our current moment based on our past experiences.

Being present is a combination of sensations that confirm our prediction of where we are spatially and mentally. If I were to sense or experience a moment that is out of the ordinary and a magnitude beyond what I expect, I would question the reality of my environment in that moment. In other words, if my prediction of the near future is shattered by an extraordinary experience outside my threshold of reality, my presence comes into question. For example, if I were to approach my sink and turn on my faucet and nothing comes out, something unexpected has happened, but I’ve had similar experiences in the past, so I can imagine what had gone wrong and find a solution. On the other hand, if I go to turn on the faucet and butterflies fly out, the presence of the faucet in my reality or my presence in this reality would be questioned. Is that real? Is this real? Could this be happening now? Am I dreaming?

We are often willing to accept the presence of other people with only a fraction or glimpse of their entire presence. I often mistake a mannequin in my peripheral vision for a real person, only to be surprised when a closer look reveals its lifelessness. I can also fool myself into believing I’m somewhere else, no matter how hard I try to resist, when I listen to binaural recordings of a previous space and time. We are willing to complete the picture for ourselves because we try to match previous experiences to our current experience to predict how our environment should be. Could the presence implied by a mannequin persist over a long period of time? Can audio input be so convincing that we question our mismatched vision?

In each confirmation of another’s presence, I think we are looking for life. Is the other alive? The classic horror movie scenario of walking down a hall of medieval armor statues questions how we determine the presence of another being. Is someone wearing the armor and standing very still, or is it empty and lifeless? The presence of a ghost or prankster is only confirmed when something moves. Or is that enough? If their axe drops to the ground, would we assume someone deliberately dropped the axe, or did the shifting of my weight on the creaking floors cause the accident? Would a glimpse of a pair of eyes confirm our suspicions of someone’s presence? Would the sound or smell of someone’s breath convince us? Whether it is a single clue or a combination of movement, sight and sound, we are looking for a confirmation of life.

If I follow my brother into a dark basement, I would continually feel his presence no matter how dark or silent the room may be. Even if he didn’t respond to me calling his name, I would still expect him to be there when I turn the lights on. Perhaps he’s ignoring me or playing a game with me. It would be a hard stretch of the imagination and a break in my reality if I turned the lights on and he was nowhere to be found. Perhaps I would even search for him in the basement behind the shelves and behind the boxes. Without some indication of him exiting the basement, like the sound of the door closing or the sound of his footsteps, his presence is automatically completed by a single instance of his existence before we entered the basement. Our imagination wants to complete our senses to detect his presence.

We want presence and will stretch our imagination to detect presence.

CFOF is an audio to visual translator that maps audio frequencies and volume onto a minimal surface (Catalan’s Surface).

AGS aka Haiku Shake is a haiku generator. Tilting to the left begins the trickling of the haiku. Each haiku is generated randomly based on 3 haiku forms and a set of preselected words. To generate a new haiku, just shake it.

4 layers of industrial felt hold all the pieces together: 4 AA batteries, one Arduino with an accelerometer, and an LCD panel.
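The generation step described above (pick one of 3 haiku forms, then fill it with preselected words) can be sketched roughly as below. This is not the code running on the device: the word lists, the three forms, and the name `generate_haiku` are all made up for illustration, and each form is assumed to be a 5-7-5 pattern of syllable counts.

```python
import random

# Hypothetical word lists grouped by syllable count (not the device's actual words).
WORDS = {
    1: ["rain", "moon", "stone", "wind"],
    2: ["river", "silent", "morning", "shadow"],
    3: ["butterfly", "memory", "afternoon"],
}

# Three hypothetical haiku forms. Each line is a list of syllable counts
# that adds up to 5, 7 and 5.
FORMS = [
    [[2, 3], [3, 2, 2], [1, 2, 2]],
    [[3, 2], [2, 2, 3], [2, 3]],
    [[1, 2, 2], [2, 3, 2], [3, 2]],
]


def generate_haiku(rng=random):
    """Pick a form at random, then fill each slot with a random word of that length."""
    form = rng.choice(FORMS)
    return [" ".join(rng.choice(WORDS[n]) for n in line) for line in form]


if __name__ == "__main__":
    # Each call stands in for one shake of the device.
    for line in generate_haiku():
        print(line)
```

Keeping the forms as syllable patterns means new words can be added without touching the forms, as long as each word is filed under the right syllable count.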

Make: ITP Winter show

more coverage on the ITP Winter Show

bits at nytimes.com: Object-Oriented Objects at N.Y.U.

Here’s a little write-up on momo and the 2007 ITP Winter Show at nymag.com.

nymag.com : MoMo Isn’t Exactly the New Seeing-Eye Dog, But He Sure Is Cute

Momo is a haptic navigational device that requires only the sense of touch to guide a user. No maps, no text, no arrows, no lights. It sits on the palm of one’s hand and leans, vibrates and gravitates towards a preset location. Akin to someone pointing you in the right direction, there is no need to find your map, you simply follow as the device gravitates to your destination.

momo version 4 now has a sturdier, lighter frame. A couple of switches were added to control power to the Arduino and to the GPS unit. The whole unit somehow runs off 4 AA batteries instead of 8. I also realized the compass unit is not as accurate as we’d like. It has a sour spot around 180-270 degrees where the measurements are noticeably off by several degrees, possibly due to electromagnetic interference from the nearby motors.

momo version 4 (pov) from che-wei wang on Vimeo.

momo beta scenario 01 from che-wei wang on Vimeo.

moMo V2 : compass motion 01 from che-wei wang on Vimeo.

Precedents:

http://www.beatrizdacosta.net/stillopen/gpstut.php

http://www.mologogo.com/

http://mobiquitous.com/active-belt-e.html

http://news.bbc.co.uk/2/hi/technology/6905286.stm

http://www.freymartin.de/en/projects/cabboots

-new compass unit calculates true north in relation to itself and waypoint (so it points in the right direction even if you spin it)

-upgraded to 32 Channel!! ETek GPS module

-4 AA batteries power the entire circuit with 5.6 V at 2700 mAh

-a new skeleton ( to be outfitted with a flexible skin soon )

-updated code for smoother motion, although it’s soon to become obsolete as we move into a more gestural configuration ( like Keepon ) without the deadly lead weight.
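The compass item in the list above comes down to two angles: the bearing from the current GPS fix to the waypoint, and the compass heading of the device itself. Here is a minimal sketch of that arithmetic, not the code on the device. The function names are made up, the bearing uses the standard great-circle formula, and the heading is assumed to already be corrected to true north.

```python
import math


def bearing_to_waypoint(lat, lon, wp_lat, wp_lon):
    """Initial great-circle bearing from (lat, lon) to the waypoint,
    in degrees clockwise from north (0 to 360)."""
    phi1, phi2 = math.radians(lat), math.radians(wp_lat)
    dlon = math.radians(wp_lon - lon)
    y = math.sin(dlon) * math.cos(phi2)
    x = (math.cos(phi1) * math.sin(phi2)
         - math.sin(phi1) * math.cos(phi2) * math.cos(dlon))
    return math.degrees(math.atan2(y, x)) % 360.0


def lean_direction(heading, bearing):
    """Angle to lean relative to the front of the device, in (-180, 180].
    Positive means lean to the right, negative to the left."""
    diff = (bearing - heading) % 360.0
    return diff - 360.0 if diff > 180.0 else diff


if __name__ == "__main__":
    # Hypothetical coordinates, for illustration only.
    bearing = bearing_to_waypoint(40.7295, -73.9965, 40.7484, -73.9857)
    print(lean_direction(heading=90.0, bearing=bearing))
```

Because the heading is subtracted from the bearing, spinning the device changes the lean angle by the same amount in the opposite direction, so the lean keeps pointing at the waypoint.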

“What is ITP?” was originally presented at ITP at NYU in the “Applications of Interactive Technologies” class November 6, 2007. Produced by the red GOAT team: chris cerrito, ja in koo, eduardo lytton, kim thompson, rodrigo de benito sanz, xiaoyang feng, ami snyderman, and che-wei wang.

Grass Type is a virtual grass mat. Mouse movements send waves of wind across the grass, erasing the imprinted text which then slowly reappears over time as the wind dies down. (developed with Tim Stutts)

Download grasstextapp.zip GrassTypeAdobe.app.zip (for Macs, written in C and OpenGL).

SlaHuRa or Slappable Huggable Radio is an FM and AM radio that uses an accelerometer (tilt sensor) to change volume and frequency. There are no buttons to press or dials to spin. Tilting the radio from side to side adjusts the frequency. Tilting it from front to back changes the volume.
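One way to think about the tilt control is as a pair of linear mappings: side-to-side tilt to frequency, front-to-back tilt to volume. The sketch below is only an illustration under assumptions. It treats tilt as an absolute position (the radio may well nudge the values up and down instead), uses a common FM band of 87.5 to 108.0 MHz, and assumes a 45 degree usable tilt range; all names are hypothetical.

```python
# Assumed tuner range (a common FM broadcast band), not taken from the radio itself.
FM_MIN_MHZ, FM_MAX_MHZ = 87.5, 108.0


def clamp(value, low, high):
    """Keep value inside [low, high]."""
    return max(low, min(high, value))


def scale(value, in_low, in_high, out_low, out_high):
    """Linearly map value from one range to another, clamping at the ends."""
    value = clamp(value, in_low, in_high)
    return out_low + (value - in_low) * (out_high - out_low) / (in_high - in_low)


def tilt_to_radio(roll_deg, pitch_deg, max_tilt=45.0):
    """Map side-to-side tilt (roll) to an FM frequency in MHz and
    front-to-back tilt (pitch) to a volume from 0 to 100."""
    frequency = round(scale(roll_deg, -max_tilt, max_tilt, FM_MIN_MHZ, FM_MAX_MHZ), 1)
    volume = round(scale(pitch_deg, -max_tilt, max_tilt, 0, 100))
    return frequency, volume


if __name__ == "__main__":
    print(tilt_to_radio(roll_deg=10.0, pitch_deg=-20.0))
```

Clamping at the ends keeps an over-enthusiastic tilt (or a slap) from sending the tuner outside the band.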

Slightly updated code for incorporating vibe control via serial.

Here’s our Processing sketch for taking a live GPS feed of some guy in Kansas who never seems to move. The applet displays his location and our fixed location, and controls MoMo, our haptic navigation device, via a serial connection.

[HND: Live GPS]

Step on board. Metallic cocoon. Shielded space. Muffled noises. Is there less inside or outside? I’m here. Beep at me. Do you know I’m staring at you? Noises and patterns breed deafness and sameness. Roaring sounds from the back shift their pitch up and down. Coughs, sneezes, whines, and screams. It’s quiet. Or am I just numb? Nobody speaks. The driver doesn’t announce the stops. Spaces shift. Volumes collide. Everyone is just here to transport their motionless bodies.

I’m working on an interactive grass mat with Tim Stutts. Using the subtleties in the grass movements, we can imprint text or figures into realistically rendered grass, projected from above. Here’s my first step. . .

Updated Here: Grass Type

A haptic navigational device requires only the sense of touch to guide a user. No maps, no text, no arrows, no lights. HND sits on the palm of one’s hand and leans, vibrates and gravitates towards a preset location. Imagine an egg sitting on the palm of your hand, obeying its own gravitational force at a distant location. The egg magically tugs and pulls you. No need to look at any maps. Simply follow the tug.

This is what we want to make. (Eduardo Lytton, Kristin O’Friel + me)

The possible user scenarios that can come out of this device range from treasure hunts to assistive technology for the blind.

Possible methods of creating the sensation of pull or tug:

Weight shifting via servo motors

Vibration motors

Gyroscopes | Gyroscopes.org

Precedents:

http://www.beatrizdacosta.net/stillopen/gpstut.php

http://www.mologogo.com/

http://mobiquitous.com/active-belt-e.html

http://news.bbc.co.uk/2/hi/technology/6905286.stm

http://www.freymartin.de/en/projects/cabboots

Instigate change.

Observations on navigating and searching using cold, cooler, warm, warmer, and hot.

At certain intersections, the city offers a push button at the pedestrian crosswalk with a sign that reads, “To cross street, push button, wait for signal, wait for walk signal.”

Hypothesis: People love to push buttons. It gives us a sense of control. So, people push the crosswalk button. And perhaps they push it several times to make sure it’s been pressed. They’ll keep checking for the green light to get a confirmation that the button has caused a series of events that will lead to a signal change in the very near future. People may also respond slowly once the signal changes to green.

Site:Location: 2 crosswalks 50′ apart, each with an island along the median strip between 6 lanes of vehicular traffic at Christopher and West St. Approximately 50 paces to cross. 1:30pm, 9/17/2007

Scenario #01:

Single male approaches red light. 25 years old.

25 seconds into waiting, stares at incoming traffic

44 seconds into waiting, stares across street, perhaps at traffic signal

58 seconds into waiting, light turns green. Begins walking immediately. (52 paces to the other side of the street)

It seems like the preferred analogy for voltage, current and resistance uses water with pressurized tanks, hoses, etc. The waterfall analogy was the easiest for me to understand.

If we draw an analogy to a waterfall, the voltage would represent the height of the waterfall: the higher it is, the more potential energy the water has by virtue of its distance from the bottom of the falls, and the more energy it will possess as it hits the bottom. . . If we think about our waterfall example, the current would represent how much water was going over the edge of the falls each second. . . In the waterfall analogy, resistance would refer to any obstacles that slowed down the flow of water over the edge of the falls. Perhaps there are many rocks in the river before the edge, to slow the water down. Or maybe a dam is being used to hold back most of the water and let only a small amount of it through. . . if you think about our waterfall example: the higher the waterfall, the more water will want to rush through, but it can only do so to the extent that it is able to as a result of any opposing forces. If you tried to fit Niagara Falls through a garden hose, you’d only get so much water every second, no matter how high the falls, and no matter how much water was waiting to get through! And if you replace that hose with one that is of a larger diameter, you will get more water in the same amount of time. . . more on Voltage Current Resistance
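The relationship the waterfall analogy is describing is Ohm’s law: current equals voltage divided by resistance (I = V / R). A tiny sketch with made-up numbers, just to see the analogy in figures; the function name and the values are illustrative only.

```python
def current_amps(voltage_volts, resistance_ohms):
    """Ohm's law: current (amps) = voltage (volts) / resistance (ohms)."""
    if resistance_ohms <= 0:
        raise ValueError("resistance must be positive")
    return voltage_volts / resistance_ohms


if __name__ == "__main__":
    # Illustrative values only: a 6 V supply across a 300 ohm resistor.
    print(current_amps(6.0, 300.0))   # baseline
    print(current_amps(12.0, 300.0))  # higher waterfall: twice the voltage, twice the current
    print(current_amps(6.0, 600.0))   # narrower hose: twice the resistance, half the current
```

In the terms of the analogy, raising the falls (voltage) pushes more water through each second, while a narrower hose (more resistance) lets less through.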