Category: Arduino

GSR Reader

Galvanic skin response readings are simply the measurement of electrical resistance through the body. Two leads are attached to two fingertips. One lead sends current while the other measures the difference. This setup measures GSR every 50 milliseconds. Each reading is graphed, while peaks are highlighted and an average is calculated to smooth out the values. A baseline reading is taken for 10 seconds if the readings go flat (fingers removed from leads).
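As a rough illustration of the smoothing, peak-highlighting and flat-line steps described above, here is a minimal C++ sketch. It is not the code running on the reader. The window size, the peak rule (a sample higher than both neighbours), the flatness tolerance and all of the names (`MovingAverage`, `isPeak`, `isFlat`) are assumptions made for the example.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Moving average over the last `window` readings (window size is an assumption).
class MovingAverage {
public:
    explicit MovingAverage(std::size_t window) : window_(window) {
        assert(window > 0);
    }

    // Add one raw reading and return the current smoothed value.
    double add(int reading) {
        samples_.push_back(reading);
        sum_ += reading;
        if (samples_.size() > window_) {
            sum_ -= samples_.front();
            samples_.erase(samples_.begin());
        }
        return static_cast<double>(sum_) / samples_.size();
    }

private:
    std::size_t window_;
    std::vector<int> samples_;
    long sum_ = 0;
};

// Hypothetical peak rule: a reading strictly higher than both neighbours.
bool isPeak(int previous, int current, int next) {
    return current > previous && current > next;
}

// Hypothetical flat-line rule: all readings stay within `tolerance` of each other.
bool isFlat(const std::vector<int>& readings, int tolerance) {
    if (readings.empty()) return false;
    int lowest = readings[0];
    int highest = readings[0];
    for (int r : readings) {
        if (r < lowest) lowest = r;
        if (r > highest) highest = r;
    }
    return highest - lowest <= tolerance;
}
```

With one reading every 50 milliseconds, a 10 second baseline works out to 200 readings, so a buffer of that length could be passed to something like `isFlat` before averaging it into a baseline.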

Ornos: Prototype 02

Here’s the first test with Ornos. The compass readings are behaving pretty well considering it’s right underneath a spinning hard drive. The 1.2 GHz processor and 512 MB of RAM don’t seem to be enough to download and render the image quickly, so I’m going to have to figure out how to speed things up.

Etek EB-85A GPS Example Code

Here’s some example Arduino code for getting an Etek EB-85A module up and reading latitude and longitude (it will probably work with most GPS modules). You can purchase a module from SparkFun.

The module only needs power, ground, RX and TX. Most modules, like the Etek, start sending NMEA strings as soon as they have power. The Etek module takes a minute or two to get a satellite fix from a cold start in urban environments. Signals drop out once in a while between tall buildings at street level, even with DGPS and SBAS. On a clear day, if you’re lucky, you can get a signal sitting by the window in urban canyons.
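To give an idea of what reading latitude and longitude out of one of those NMEA strings involves, here is a small plain C++ sketch that splits a `$GPRMC` sentence on commas and converts the `ddmm.mmmm` fields to decimal degrees. It is separate from the Arduino listing and is only an illustration: `GpsFix`, `splitFields`, `toDecimalDegrees` and `parseGprmc` are made-up names, the result is stored as `double` rather than `long`, and the checksum after the `*` is ignored rather than verified.

```cpp
#include <cmath>
#include <cstdlib>
#include <string>
#include <vector>

struct GpsFix {
    bool valid;        // true only when the receiver reports status 'A'
    double latitude;   // decimal degrees, south is negative
    double longitude;  // decimal degrees, west is negative
};

// Split a sentence on commas, stopping at the '*' that starts the checksum.
std::vector<std::string> splitFields(const std::string& sentence) {
    std::vector<std::string> fields;
    std::string current;
    for (char c : sentence) {
        if (c == '*') break;
        if (c == ',') {
            fields.push_back(current);
            current.clear();
        } else {
            current += c;
        }
    }
    fields.push_back(current);
    return fields;
}

// Convert NMEA (d)ddmm.mmmm plus a hemisphere letter to signed decimal degrees.
double toDecimalDegrees(const std::string& value, const std::string& hemisphere) {
    double raw = std::atof(value.c_str());
    double degrees = std::floor(raw / 100.0);
    double minutes = raw - degrees * 100.0;
    double result = degrees + minutes / 60.0;
    if (hemisphere == "S" || hemisphere == "W") result = -result;
    return result;
}

// Fields: 0 = "$GPRMC", 1 = time, 2 = status, 3/4 = latitude, 5/6 = longitude.
GpsFix parseGprmc(const std::string& sentence) {
    GpsFix fix = {false, 0.0, 0.0};
    std::vector<std::string> f = splitFields(sentence);
    if (f.size() < 7 || f[0] != "$GPRMC" || f[2] != "A") return fix;
    fix.latitude = toDecimalDegrees(f[3], f[4]);
    fix.longitude = toDecimalDegrees(f[5], f[6]);
    fix.valid = true;
    return fix;
}
```

A status field of `V` instead of `A` means the receiver has no valid fix yet, which is what you would expect to see during the cold start described above.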

//Etek GPS EB-85A Module Example

//by Che-Wei Wang and Kristin O'Friel

//32 Channel etek GPS unit

//modified from original code by Igor González Martín. http://www.arduino.cc/playground/Tutorials/GPS

boolean startingUp=true;

boolean gpsConnected=false;

boolean satelliteLock=false;

long myLatitude,myLongitude;

//GPS

#include

#include

int rxPin = 0; // RX PIN

int txPin = 1; // TX PIN

int byteGPS=-1;

char linea[300] = "";

char comandoGPR[7] = "$GPRMC";

int cont=0;

int bien=0;

int conta=0;

int indices[13];

//////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////

//////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////

void setup() {

//GPS

pinMode(rxPin, INPUT);

pinMode(txPin, OUTPUT);

for (int i=0;i

Ornos : Prototype 01

I was going to cover the laser-cut masonite with a leather sleeve, but I’m going to go with CNC-milled RenShape with a painted finish for the next prototype. The compass needs to be calibrated to the offsets caused by the magnetized computer hardware, and I need to tweak some code to get the frames to load faster and more smoothly. I’ll post a video as soon as that part is worked out.

Stabilize : Accelerometer + 4 Servo Motors

So far, the Arduino reads the x and y tilt values and translates them into motion across 4 servos to maintain a horizontal platform at the top. Next, I need to get the z tilt values to reverse the x and y tilts, so you can tilt the whole thing upside down and still have the top platform facing up.
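The x/y part of that idea can be sketched in a few lines of plain C++. This is a guess at the general shape, not the code on the board: it assumes one servo on each side of the platform, a neutral position of 90 degrees, and a one-to-one mapping between tilt in degrees and servo correction. The names `ServoAngles`, `clampAngle` and `levelPlatform` and the sign conventions are all hypothetical.

```cpp
#include <algorithm>

// One hypothetical servo per side of the platform.
struct ServoAngles {
    int front;
    int back;
    int left;
    int right;
};

// Keep a command inside the 0..180 degree range of a typical hobby servo.
int clampAngle(int angle) {
    return std::max(0, std::min(180, angle));
}

// Push opposite servos in opposite directions to cancel the measured tilt.
// tiltXDeg and tiltYDeg are the tilt readings already converted to degrees.
ServoAngles levelPlatform(int tiltXDeg, int tiltYDeg) {
    const int center = 90;  // assumed neutral position
    ServoAngles angles;
    angles.right = clampAngle(center - tiltXDeg);
    angles.left = clampAngle(center + tiltXDeg);
    angles.front = clampAngle(center - tiltYDeg);
    angles.back = clampAngle(center + tiltYDeg);
    return angles;
}
```

On real hardware the gain and signs would have to be tuned to the linkage, and flipping the corrections when the z reading changes sign would be one way to approach the upside-down case.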

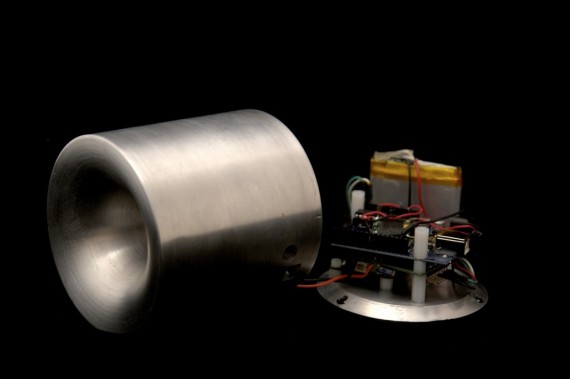

Ornos : A View from Above

From the first hand-drawn maps of the stars to today’s satellite imagery and GPS navigation, our frame of reference and our perception of space have been molded into a view from above. Our understanding of place is often linked to an abstract representation on a map rather than a physical, relational comprehension. You could probably point out Azerbaijan on a map, but how many of us can simply point in its direction across the globe? The image of the globe projected onto a vertical surface is so pervasive that we often associate “up” with north as we project ourselves into a mental image of a map.

The accessibility of GPS and online map services has continued to reinforce the “up” vector while creating a greater divide between the physical world and its virtual representations. Today, we view from above, as primarily experienced on our screens, in an elevation view without any regard to its physical context. We project our presence into the screen through multiple translations of orientation. Viewing a map on a computer screen requires one to find a location on the screen that represents a position; then the abstracted orientation of the vertical screen must be translated and scaled into the physical context of the current position. We’ve lost a step in comprehension without the compass and the horizontal map. The traditional map and compass gave an intuitive understanding of a current position in relation to physical space by rotating the map to align with the space it represented. What appeared one inch to the left of my location on the map could be confirmed by looking up to my left.

Ornos is a telescopic view from above. The horizontal screen reconstructs a view from a position directly above itself using satellite imagery and maps. Exploring your current surroundings is as simple as sliding the device on any surface to pan across the globe. Zooming is controlled by rotating the device itself. The onboard digital compass and GPS modules orient the image on the screen to reflect your physical surroundings while satellite imagery and maps are dynamically loaded from Google, Microsoft, or Yahoo.

Ornos : Prototype 01 from che-wei wang on Vimeo.


Micromag 3 Axis

Here’s some modified code from Sensor Workshop to get heading values in Arduino from the Micromag 3 axis magneto sensor. Next up, accelerometer calculations on the magneto sensor to get tilt compensation on the compass readings.
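For reference, the core of turning two magnetometer axes into a heading is a single `atan2` call. The sketch below is plain C++ and is not the Sensor Workshop code. It assumes the sensor is held level (no tilt compensation yet), that x and y are the two horizontal axes, and it picks one sign convention among several possible ones. `headingDegrees` is a made-up name.

```cpp
#include <cmath>

// Heading in degrees, 0..360, from the two horizontal magnetometer axes.
// Which axis is "forward" and which direction counts as positive depends on
// how the sensor is mounted, so treat the argument order as an assumption.
double headingDegrees(double x, double y) {
    const double kPi = 3.14159265358979323846;
    double heading = std::atan2(y, x) * 180.0 / kPi;  // -180..180
    if (heading < 0.0) heading += 360.0;               // shift negatives up
    return heading;
}
```

Once the board is tilted, the x and y readings no longer lie in the horizontal plane, which is why the accelerometer is needed for the tilt compensation mentioned above.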

AGS : Haiku Shake V02

AGS aka Haiku Shake is a haiku generator. Tilting to the left begins the trickling of the haiku. Each haiku is generated randomly based on 3 haiku forms and a set of preselected words. To generate a new haiku, just shake it.

AGS : Haiku Shake V01 Shell

4 layers of industrial felt hold all the pieces together: 4 AA batteries, one Arduino with an accelerometer, and an LCD panel.

moMo : version 2

-new compass unit calculates true north in relation to itself and the waypoint (so it points in the right direction even if you spin it)

-upgraded to 32 Channel!! ETek GPS module

-4 AA batteries power the entire circuit with 5.6 V at 2700 mAh

-a new skeleton ( to be outfitted with a flexible skin soon )

-updated code for smoother motion, although it’s soon to become obsolete as we move into a more gestural configuration ( like Keepon ) without the deadly lead weight.
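The "points in the right direction even if you spin it" behaviour boils down to two angles: the bearing from the current GPS position to the waypoint, and the difference between that bearing and the compass heading. Here is a hedged C++ sketch of that arithmetic using the standard initial great-circle bearing formula. It is not the moMo code: the function names are invented, and magnetic declination is ignored, so the heading is assumed to be already referenced to true north.

```cpp
#include <cmath>

const double kPi = 3.14159265358979323846;

double toRadians(double degrees) { return degrees * kPi / 180.0; }
double toDegrees(double radians) { return radians * 180.0 / kPi; }

// Initial great-circle bearing from point 1 to point 2, in degrees clockwise
// from north (0..360). Inputs are decimal degrees.
double bearingTo(double lat1, double lon1, double lat2, double lon2) {
    double phi1 = toRadians(lat1);
    double phi2 = toRadians(lat2);
    double dLon = toRadians(lon2 - lon1);
    double y = std::sin(dLon) * std::cos(phi2);
    double x = std::cos(phi1) * std::sin(phi2) -
               std::sin(phi1) * std::cos(phi2) * std::cos(dLon);
    return std::fmod(toDegrees(std::atan2(y, x)) + 360.0, 360.0);
}

// How far the device has to turn to face the waypoint, in the range
// -180..180. Negative means the waypoint is to the left of the heading.
double relativeBearing(double bearing, double heading) {
    return std::fmod(bearing - heading + 540.0, 360.0) - 180.0;
}
```

Because `relativeBearing` is recomputed from the live heading, spinning the device changes the heading but not the bearing, so the lean direction stays locked on the waypoint.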

HND: Live GPS and Vibe

Slightly updated code for incorporating vibe control via serial.

A Haptic Navigational Device

A haptic navigational device requires only the sense of touch to guide a user. No maps, no text, no arrows, no lights. HND sits on the palm of one’s hand and leans, vibrates and gravitates towards a preset location. Imagine an egg sitting on the palm of your hand, obeying its own gravitational force at a distant location. The egg magically tugs and pulls you. No need to look at any maps. Simply follow the tug.

This is what we want to make. (Eduardo Lytton, Kristin O’Friel + me)

The possible user scenarios that can come out of this device range from treasure hunts to assistive technology for the blind.

Possible methods of creating the sensation of pull or tug:

Weight shifting via servo motors

Vibration motors

Gyroscopes | Gyroscopes.org

Precedents:

http://www.beatrizdacosta.net/stillopen/gpstut.php

http://www.mologogo.com/

http://mobiquitous.com/active-belt-e.html

http://news.bbc.co.uk/2/hi/technology/6905286.stm

http://www.freymartin.de/en/projects/cabboots